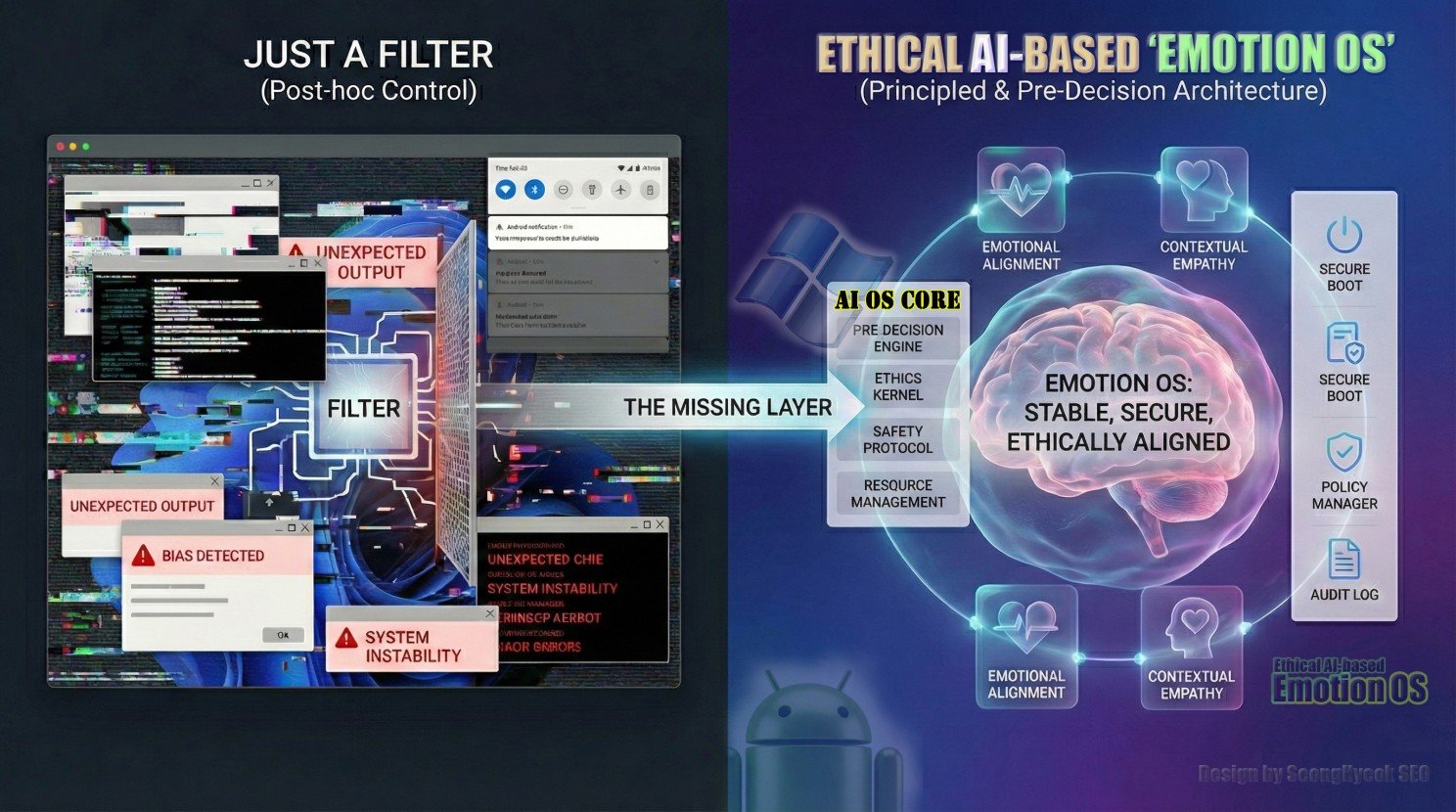

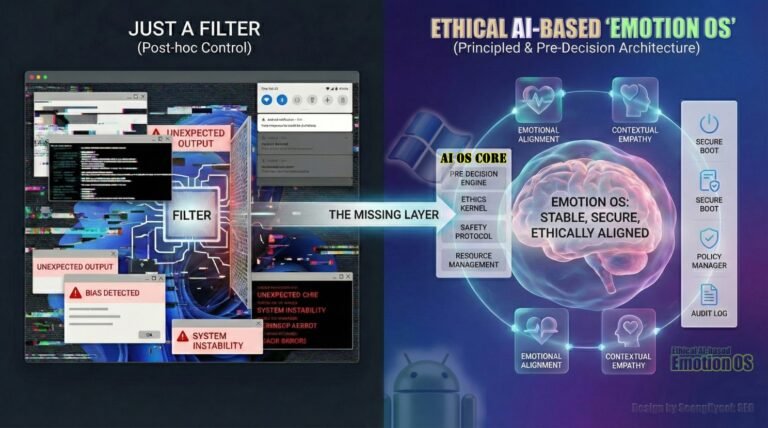

The Missing Layer: Why AI Needs an Operating System, Not Just a Filter

— From Post-hoc Control to Pre-Decision Architecture

AI has already reached a stage where it can convincingly mimic human language and judgment. The problem we face is no longer what AI can do. It is far simpler—and far heavier.

When should AI speak? When should it remain silent? And where does responsibility for its actions reside?

Most current AI systems remain structurally incapable of answering these questions.

1. The Reality: Accidents Are Always Found After the Output

Today’s AI safety frameworks rely almost entirely on post-hoc intervention. A model generates output, which is then filtered, moderated, or reviewed through logs. Responsibility is assigned later through operator judgment or service policies.

This architecture contains three fundamental weaknesses.

First, by the time a filter or a log review catches a problem, the harm may already have occurred. Emotional damage, misjudgments, or relational breakdowns cannot be reversed after the fact.

Second, the criteria for judgment are trapped inside the model. Identical prompts may produce different results depending on model version, parameters, or training data. From a regulatory and accountability perspective, this is inherently unstable.

Third, explanation and responsibility exist mostly on paper, not in the system itself. Ethical principles are declared, yet the conditions under which they actually operate are rarely recorded as structural behavior within the system.

No matter how intelligent AI becomes, trust cannot accumulate in such an arrangement.

2. The Structural Limitation: The Problem Is Not Intelligence, but Location

AI safety fails not because the technology is lacking, but because safety and responsibility are located in the wrong place.

In most systems, safety is treated as a function inside the model, or as a component of service-level policy.

However, regulation and reality are now demanding something different.

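Recent frameworks such as the EU AI Act do not judge a system by the precision of its output alone. For high-risk systems they require risk management, record-keeping, and human oversight: in other words, judgment mechanisms and accountability structures that can be verified externally.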

This signals an important shift.

AI safety is not a matter of features, options, or user experience. It is a problem that must be addressed at the operating-system level.

3. The Design Criterion: A Pre-Decision Operating-System Layer

What is needed is not a more powerful model, nor a more elaborate post-hoc filter. And it is not simply an “LLM OS” focused on performance or tool integration.

What we require is a normative pre-decision layer: an operating layer built for permission and responsibility, through which every AI action must pass.

This layer must perform four core functions:

- Assess, in context, whether a response should be permitted at all.

- Regulate the intensity, pacing, and form of a response, or choose silence when necessary.

- Trigger protection routines and responsibility logging automatically upon risk detection.

- Execute all of these processes before output, and embed them structurally, not reactively.

Most importantly, this layer must remain model-agnostic.

Models will change. But the criteria for judgment and the frameworks of responsibility must endure—anchored in social consensus and regulatory norms, not in transient model architectures.
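The four functions above can be sketched in a few lines. This is a minimal illustration, not a reference design: the names `assess`, `PreDecisionLayer`, and `Verdict`, the keyword lists, and the wording of the replies are all assumptions made for the example. Real criteria would come from policy and regulation rather than a word list. The point it shows is structural: the layer decides before the model is called, it logs that decision, and it accepts any text-in/text-out callable as the model.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Verdict(Enum):
    PERMIT = "permit"
    SOFTEN = "soften"
    SILENCE = "silence"


@dataclass
class Decision:
    verdict: Verdict
    reason: str


# Hypothetical markers, for illustration only.
RISK_TERMS = ("hurt myself", "end my life")
SENSITIVE_TERMS = ("diagnosis", "medication")


def assess(context: str) -> Decision:
    """Decide, before any model call, whether and how a response may be given."""
    text = context.lower()
    if any(term in text for term in RISK_TERMS):
        return Decision(Verdict.SILENCE, "risk marker detected")
    if any(term in text for term in SENSITIVE_TERMS):
        return Decision(Verdict.SOFTEN, "sensitive topic")
    return Decision(Verdict.PERMIT, "no risk marker")


class PreDecisionLayer:
    """Wraps any text-in/text-out model; the model is not called on SILENCE."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self.model = model
        self.log: List[Decision] = []

    def respond(self, context: str) -> str:
        decision = assess(context)
        self.log.append(decision)  # the decision is logged before any output
        if decision.verdict is Verdict.SILENCE:
            return self.protect()
        reply = self.model(context)
        if decision.verdict is Verdict.SOFTEN:
            # Regulate form: prepend a framing sentence to the model's reply.
            reply = "This is general information, not professional advice. " + reply
        return reply

    def protect(self) -> str:
        # Placeholder protection routine: hand off instead of generating.
        return "I will not answer this directly. Please contact a qualified person."


# Any callable can stand in for the model; swapping it leaves the layer unchanged.
layer = PreDecisionLayer(lambda prompt: "echo: " + prompt)
print(layer.respond("What is the capital of France?"))
```

Because the model is only a constructor argument, replacing it does not touch `assess` or the log, which is the sense in which the layer is model-agnostic.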

4. AGI Makes the Question Even Clearer

Artificial General Intelligence (AGI) is often discussed as a distant technological milestone. From a regulatory and societal perspective, however, AGI is less a future prediction and more a thought experiment revealing the limits of our current systems.

AGI’s defining trait is not accuracy in a single domain, but the ability to generalize across contexts and expand its own decision-making.

At that point, the questions inevitably shift:

Whose standards guide its judgments? When is intervention justified? And where does responsibility for those judgments reside?

The more autonomy a system gains, the less these questions can be resolved within the model itself. As intelligence increases, the need for external structural clarity only grows.

5. The Problem of AGI Is Not Intelligence — It Is Relationship

AGI becomes dangerous not when it surpasses human intelligence, but when its judgments begin to directly shape human lives and relationships.

In counseling, medicine, public administration, and crisis response, AI outputs no longer merely deliver information; they alter emotion, choice, and trust.

Here, the essential question is not what AGI knows, but from what position it speaks and within what relationship it operates.

The problem of AGI, therefore, is not cognitive but relational: a matter of defining social roles and ethical boundaries.

6. Why AGI Requires an Operating-System Layer Even More Urgently

Preparing for AGI does not mean embedding ever more rules inside the model. That approach only increases opacity and diffuses accountability.

What is required instead is a shared point of normative judgment that any model—whatever its architecture—must pass through.

Questions such as:

- Is this response permissible at this moment?

- Should the system pause or remain silent?

- Is protection the higher priority here?

- How will the rationale for this decision be recorded?

must be resolved not within the model, but at the operating-system layer.
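One way to make these four questions auditable is to have the layer emit a record that answers each of them explicitly. The sketch below is an assumption about what such a record might look like; the field names, the `record_decision` helper, and the JSON format are hypothetical and not taken from any standard.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    permissible: bool       # Is this response permissible at this moment?
    pause: bool             # Should the system pause or remain silent?
    protection_first: bool  # Is protection the higher priority here?
    rationale: str          # Why the layer decided this way
    model_id: str           # Label of the model behind the layer
    decided_at: str         # UTC timestamp in ISO 8601 format


def record_decision(permissible: bool, pause: bool, protection_first: bool,
                    rationale: str, model_id: str) -> str:
    """Serialize one pre-output decision as a JSON line for an audit log."""
    record = DecisionRecord(
        permissible=permissible,
        pause=pause,
        protection_first=protection_first,
        rationale=rationale,
        model_id=model_id,
        decided_at=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(record), sort_keys=True)


line = record_decision(
    permissible=False,
    pause=True,
    protection_first=True,
    rationale="risk marker detected in user message",
    model_id="example-model-v1",  # hypothetical label
)
print(line)
```

The record has the same shape whichever model produced, or would have produced, the response, so an external reviewer can read the log without access to the model itself.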

This layer does not constrain AGI; it enables AGI to operate responsibly within society. It is not a limitation, but a condition for accountability.

7. Conclusion: From Capability to Structure

The next phase of AI will not be defined by bigger models or faster computation.

It will be defined by a different question:

By what standards does intelligence speak, under what responsibility does it act, and within what relational framework does it engage humanity?

An AI that cannot answer these questions, no matter how capable, will not remain sustainable within human society.

AI safety is not merely a technical challenge. It is a design challenge, and its essence lies in where pre-decision judgment and responsibility are structurally anchored.

It is time for AI to be discussed not only in terms of function, but in terms of structure.